“Unbreakable.”

“Absolutely secure.”

“No one can see this.”

Statements like these sound reassuring. They sell a sense of control, stability, and safety, exactly what organizations are looking for in an increasingly connected world. In IT security and cybersecurity, however, such superlatives are not only misleading but potentially dangerous: all too often, they take the place of transparency.

One thing holds without exception: Not having been compromised is no proof of security!

A system may appear untouched simply because it has not yet been sufficiently used, analyzed, or attacked, or because its vulnerabilities have not been found yet. The history and practice of IT security repeatedly show that many of the most critical flaws existed for years, unnoticed, until someone finally looked closely enough. The Shellshock vulnerability in Bash, for example, went undetected for roughly 25 years before it was disclosed in 2014.

Claims Are Easy — Proof Is Rare

In marketing materials, almost anything can be claimed. What truly matters is whether those claims can be substantiated in a technically verifiable way. This is precisely where marketing rhetoric diverges from technical substance.

Real, resilient security requires more than well-chosen words. It rests on solid foundations:

- realistic and documented threat models

- explicitly defined security assumptions

- verifiable technical mechanisms

- and, above all, independent third-party assessment

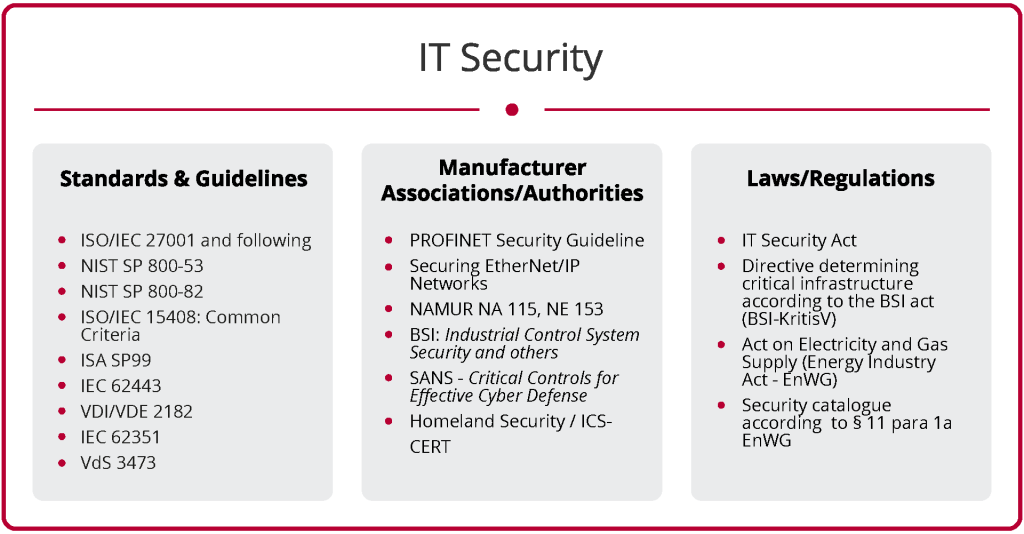

If any of these elements are missing, security remains a label, not a verifiable property. In critical domains such as automotive security, industrial control systems, or networked infrastructure, this is a risk no one can afford to ignore.

Public Audits Instead of “Security by Magic”

Audits play a central role in IT security — but they, too, deserve a closer look. An audit is not a magical stamp that certifies “absolute security.” It evaluates clearly defined aspects of a system, such as a specific implementation, a process, or a narrowly scoped functionality.

The essential questions are always:

- What exactly was audited?

- According to which standard?

- With what scope?

Audits assess implementations; they do not automatically validate the correctness of the underlying design. Nor do they replace formal analysis, public design documentation, or peer review by the expert community.

This is why, at dissecto, we deliberately avoid “security by obscurity” and any supposed secret magic. Our approach is based on well-documented procedures, established protocols, and comprehensible implementations. Security does not emerge from secrecy, but from technical transparency and verifiable substance.

Complexity Is a Risk

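The more complex a system becomes, the larger its attack surface: every additional interface, dependency, and configuration option is one more place where a flaw can hide. This is not a matter of opinion but a pattern consistently borne out in IT security practice.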

Complex architectures not only complicate maintenance but also make it harder to understand security-relevant relationships. Errors tend to hide where systems become opaque and difficult to reason about.

Robust security architectures therefore benefit from:

- clearly defined responsibilities

- reduced, controlled complexity

- transparent and logically traceable structures

“Keep it simple” is not a design flaw – it is a fundamental security principle.

Openness Creates Verifiable Trust

The gold standard for trust in cybersecurity is not a single certificate or a persuasive promise. Trust emerges from the combination of multiple verifiable factors:

- openly documented specifications

- reproducible builds

- independent code audits

- bug bounty programs

- transparent security communication

- and a community that actively attacks, questions, and improves a system

This is exactly where HydraVision comes into play.

Our test methods, assumptions, and results are transparently documented. Researchers and security professionals can analyze them, reproduce them, and publish their findings. HydraVision does not treat security as a product promise, but as a continuous, verifiable process.

Described ≠ Proven

A recurring problem in the industry is the conflation of described properties with proven properties.

As long as there is no technical specification, no reproducible evidence, and no independent validation, security remains a collection of promises. The stronger the promise, the higher the bar must be for transparency and proof. In IT security, the rule applies: Extraordinary claims require extraordinary evidence.
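Reproducible builds are one concrete form of such evidence: if independent parties compile the same published source and obtain bit-for-bit identical artifacts, the published binary no longer has to be taken on faith. The following is a minimal sketch of only the final comparison step, assuming both artifacts have already been built. The helper names (`sha256_of`, `builds_match`), file names, and file contents are hypothetical placeholders, and a real reproducible-build setup additionally requires a deterministic toolchain (fixed timestamps, paths, and dependency versions).

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the hex SHA-256 digest of a file, read in 64 KiB chunks."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(65536), b""):
            h.update(chunk)
    return h.hexdigest()


def builds_match(published: Path, rebuilt: Path) -> bool:
    """True if an independently rebuilt artifact is bit-for-bit identical
    to the published one (compared via SHA-256 digests)."""
    return sha256_of(published) == sha256_of(rebuilt)


# Demo: hypothetical byte strings stand in for real build outputs.
with tempfile.TemporaryDirectory() as tmp:
    published = Path(tmp) / "firmware_published.bin"
    rebuilt = Path(tmp) / "firmware_rebuilt.bin"
    tampered = Path(tmp) / "firmware_tampered.bin"
    published.write_bytes(b"\x7fELF example payload")
    rebuilt.write_bytes(b"\x7fELF example payload")
    tampered.write_bytes(b"\x7fELF example payl0ad")  # one byte differs
    same = builds_match(published, rebuilt)
    different = builds_match(published, tampered)

print(same, different)  # prints: True False
```

A single changed byte yields a different digest, so a mismatch is a concrete, checkable signal rather than a matter of trust. What a match does not show is that the source itself is free of flaws; that still takes audits and review.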

Closed Source and the Limits of Trust

Closed systems can be secure; that is undisputed. But they inevitably require trust in the manufacturer, and trust alone is not a sufficient security concept, especially in safety-critical environments such as automotive, industrial systems, or critical infrastructure.

Our ambition is different: We don’t want to believe in security – we want to be able to verify it.

Conclusion: Security Is Verifiable Engineering, Not a Vision

This perspective is not directed against developers or manufacturers. It is directed against a mindset. Security does not emerge from persuasion, superlatives, or visions, but from transparent, verifiable engineering. As long as security claims are not measurable, testable, and comprehensible, caution is warranted.

In the end, what matters in IT security is not the loudest promise but what can actually be verified.

Do you have questions or need support?

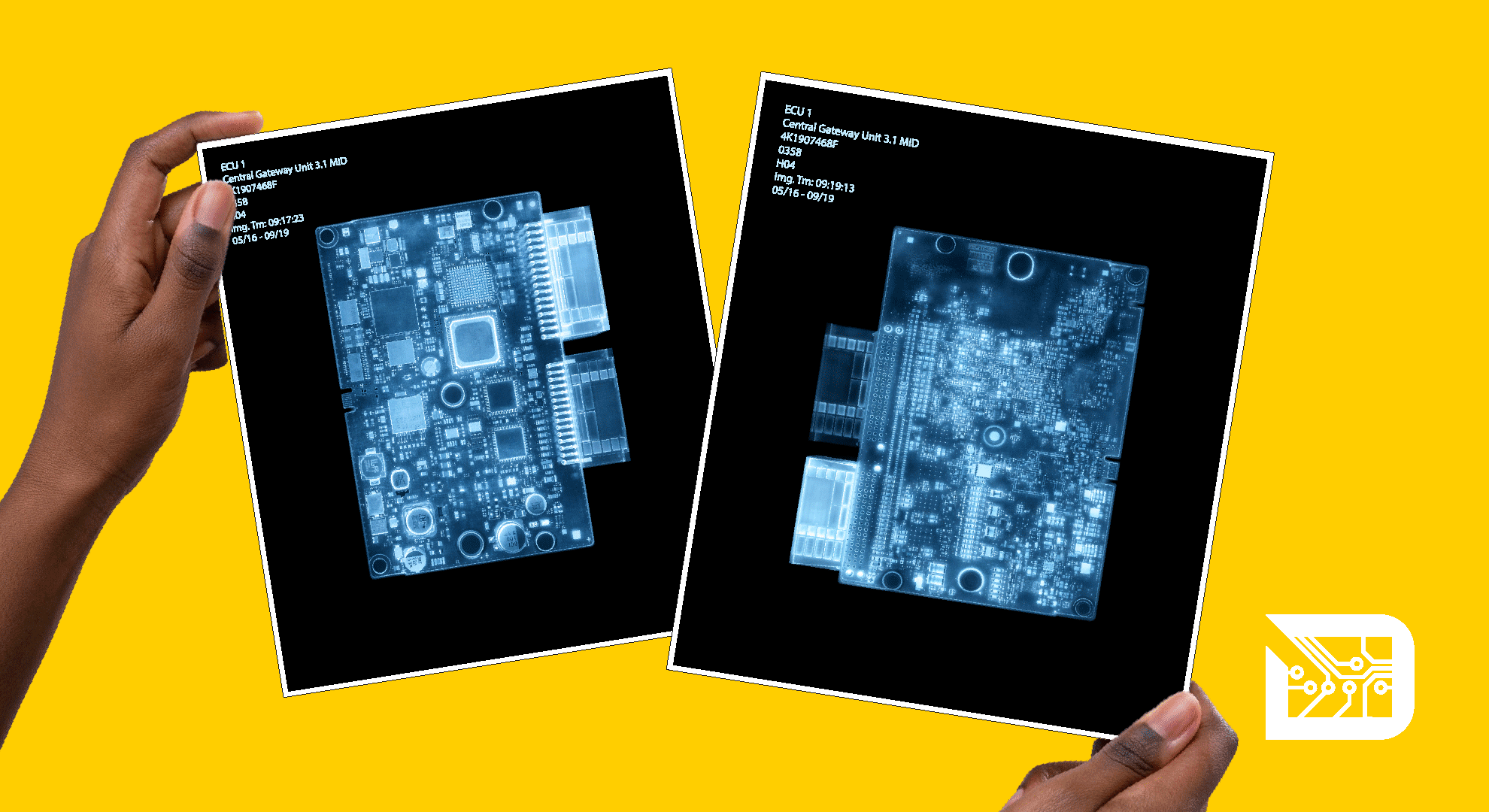

We’re here to help! Contact us with any questions about our HydraVision Security Test Environment or our penetration testing services for ECUs, vehicle networks, and embedded systems.

Skillpoints to spend? Check out our Cybersecurity Workshops and ScapyCon, our annual conference for cybersecurity aficionados!